Work With AI Securely

AI is currently THE #1 TOPIC globally and an excellent technology, if used securely. We explain how.

The emergence of Artificial Intelligence, and in particular Large Language Models (LLMs) such as ChatGPT, has taken over the Internet and the world of work in the blink of an eye. Companies around the world are facing the challenge of how to make sense of these new technologies and not fall behind. It is therefore important not only to understand the areas of application for AI, but also to be aware of how it works and how to mitigate the security risks involved.

Can systems like ChatGPT really think? Are the answers always correct? And where does the knowledge actually come from?

In this know-how article, we take a deeper look at these questions and tell you what really matters when it comes to secure use of ChatGPT and the like.

AI systems like ChatGPT can be roughly described as "sentence completers." They are fed a variety of data, including web pages, books, online forums, and social media. This data is analyzed and broken down into individual fragments, such as words or even chunks of words. Using all this input data, the computer system creates statistical patterns and calculates the probability of which word should follow next.

For an AI system, a question such as "What color is snow?" is therefore not a real question with a fixed answer. Rather, the system treats the question as the beginning of a text and continues it based on statistical patterns and probabilities. For example, the most likely next word after "What color is snow?" would be "snow," followed by "is" and "white."

To us, that may look like an answer. But to the AI, it's just the most likely completion of the sentence based on the data it's been fed.
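The "sentence completer" idea can be illustrated with a deliberately tiny sketch. It is not how ChatGPT is implemented: real LLMs use neural networks over word fragments, whereas this toy only counts which word follows which. The names `train`, `complete`, and the four-sentence `corpus` are assumptions made up for this illustration.

```python
from collections import Counter, defaultdict

def train(sentences):
    """Count, for every word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows

def complete(follows, start, max_words=5):
    """Extend `start` by repeatedly appending the most frequent next word."""
    words = start.lower().split()
    for _ in range(max_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # the last word was never followed by anything in the data
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)

# Hypothetical training data, invented for this example.
corpus = [
    "snow is white",
    "snow is white",
    "snow is cold",
    "grass is green",
]
model = train(corpus)
print(complete(model, "snow"))   # snow is white
print(complete(model, "grass"))  # grass is white
```

The second result shows why such output is a completion rather than knowledge: after "is", the word "white" is simply the most frequent continuation in the data, so the toy model applies it to grass as well.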

AI is continually being trained as well. If users provide feedback that an answer is not perfect, for example because snow can be other colors (such as slushy gray), the probabilities for the next word change and the sentence may be completed to "snow is usually white." In this way, the AI "learns".

Artificial intelligence will positively impact our everyday work, especially when it comes to finding answers to standardized questions. However, we should never blindly trust the answers. After all, ChatGPT and the like can only output the probabilities for the next words. For example, if the topic "color of snow" was not previously included in the database and someone feeds in the input "snow is blue" several times, the AI would complete the sentence just as naturally, resulting in the answer "Snow is blue". After all, this word combination would have the highest probability. So an AI works well for queries that have already been in the database countless times, but not necessarily for new or niche topics.
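The effect of a few repeated inputs on a niche topic can be sketched with simple counting. This is a simplified illustration under the assumption that next-word probabilities are plain relative frequencies; the function `next_word_probabilities` and both data sets are hypothetical.

```python
from collections import Counter

def next_word_probabilities(sentences, previous_word):
    """Return the relative frequency of each word seen right after `previous_word`."""
    counts = Counter()
    for sentence in sentences:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            if current == previous_word:
                counts[nxt] += 1
    total = sum(counts.values())
    return {word: count / total for word, count in counts.items()} if total else {}

# A well-covered topic: three wrong inputs barely matter.
well_covered = ["snow is white"] * 1000 + ["snow is blue"] * 3
# A niche topic: the same three inputs are all the data there is.
niche = ["snow is blue"] * 3

print(next_word_probabilities(well_covered, "is"))
print(next_word_probabilities(niche, "is"))
```

In the well-covered case, "blue" ends up with a probability below one percent. In the niche case it is the only continuation the system has ever seen, so it becomes the answer.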

Please remember: a public AI system processes every interaction and every newly added word and feeds it into its subsequent calculations. The provider may also store your input and use it to train the system further. Therefore, we should never feed public AI systems with sensitive information such as confidential documents, proprietary software snippets, or personal or customer data. All the data you enter will be processed and possibly integrated into future outputs. This could leak confidential information or personal data to the public. Therefore, please use AI systems with caution.
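One way to support this habit is to mask obvious identifiers before a text is pasted into a public AI system. The following is a minimal sketch only: the two patterns (email addresses and runs of six or more digits) and the function name `redact` are assumptions for illustration. Pattern matching will miss names, addresses, and most confidential content, so it does not replace human judgment.

```python
import re

# Illustrative patterns only; real sensitive data takes many more forms.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
LONG_NUMBER = re.compile(r"\b\d{6,}\b")  # e.g. customer or invoice numbers

def redact(text):
    """Replace email addresses and long digit sequences with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    text = LONG_NUMBER.sub("[NUMBER]", text)
    return text

prompt = "Please reply to jane.doe@example.com about invoice 20230417."
print(redact(prompt))
# Please reply to [EMAIL] about invoice [NUMBER].
```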

| Threat | Example |
|---|---|
| Phishing & social engineering attacks | Criminals can use AI to fake motivation letters and emails or to manipulate phone calls. |
| Information leaks | Employees can feed AI with personal or other sensitive data and thereby unintentionally publish confidential information. |
| Infection with malware | Criminals distribute free fake AI apps that steal sensitive data when installed. |

Artificial intelligence naturally offers diverse opportunities and advantages not only for companies, but also for criminals who are taking advantage of the hype surrounding AI.

This is precisely why the same principle applies to AI tools as to any other software in cyber security: in most companies, you are not allowed to download software from the Internet on your own. In your private life, too, make sure that you only install software from reputable sources and read reviews beforehand.

Treat freely available AI systems, like public cloud systems, as you would social media: any of your input can end up as output for someone else. Therefore:

Don't blindly trust the answers given out by the AI.

While these are often correct, they can also be:

AI will conquer the working world and is already in the midst of doing so.

That's why you should provide company-wide training on the topic of "using AI securely" and raise awareness of it:

In our protected demo area, you will find a cyber security training course on artificial intelligence designed by our security experts. This allows you to train your employees in the secure use of artificial intelligence.

Get a free demo account now and let's talk about your needs in a web meeting. We'll show you how to successfully strengthen security awareness in your company with employee training.

How to repackage familiar GDPR content into exciting online courses.

Find out how companies can properly motivate their employees to complete an e-learning course on cyber security, data protection or compliance.

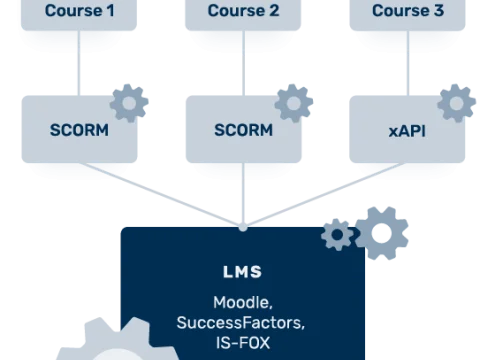

In this article, we explain the technical terms Scorm, LMS and e-learning course.

Technical settings and tips for LMS administrators